The History of AI: From Early Visions to Modern-Day Innovations

Artificial Intelligence (AI) has become one of the most transformative technologies of the 21st century. Rooted in decades of research and trial-and-error, AI has evolved from an academic curiosity to a force reshaping economies, industries, and global society. Below is a concise look at AI’s journey—from its conceptual seeds to its rapid ascent into modern deep learning and beyond.

Early Roots and Foundational Ideas

The concept of intelligent artificial beings can be traced back to folklore and mythic tales. However, the formal groundwork for AI emerged in the mid-20th century:

- Alan Turing’s Influence (1950): British mathematician Alan Turing is widely acknowledged for kick-starting AI research. In his paper “Computing Machinery and Intelligence”, he introduced the Turing Test—an innovative way to gauge machine intelligence by comparing it to human conversational abilities.

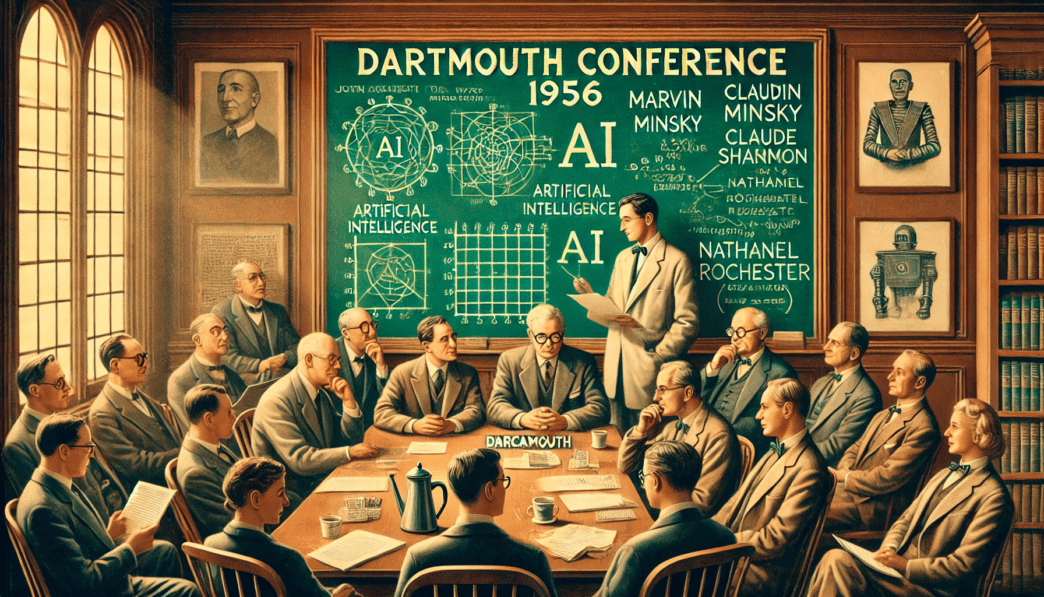

- Dartmouth Conference (1956): John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon organized a summer workshop to discuss whether machines could simulate human intelligence. The term “Artificial Intelligence”, which McCarthy had coined in the proposal for the event, took hold there, and AI was established as an academic field.

Note: Picture this historic conference as a group of tech visionaries sipping tea and saying, “So… can machines really think, or what?” Indeed, they decided to find out.

Symbolic AI and Early Achievements

The Rise of Rule-Based Systems

Throughout the 1960s and 1970s, symbolic AI—which used formal rules and logical reasoning—dominated. Researchers made strides in:

- Robotics: Early prototypes explored mechanical tasks with programmed instructions.

- Expert Systems: Machines that emulated human experts (e.g., medical diagnosis or engineering) by following “if-then” rules.

- Natural Language Processing: Efforts aimed at enabling computers to understand and produce human language.

However, symbolic AI struggled with ambiguity and the inherent messiness of real-world data. As a result, it faltered under more complex tasks.

The First “AI Winter”

By the mid-1970s, inflated expectations for AI had given way to disappointment, a pattern that would recur later in the field’s history:

- Funding Cuts: Government and private institutions reduced budgets when symbolic AI projects failed to meet grandiose promises.

- Hardware Limitations: Computers of the era weren’t powerful enough to run large-scale AI simulations effectively.

- Loss of Interest: Many researchers shifted their focus, and the field temporarily stalled.

Expert Systems and Temporary Revival

The early 1980s saw a surge in expert systems—rule-based programs that imitated the specialized knowledge of human professionals. This resurgence hinted at new promise:

- Commercial Success: Industries used these systems for tasks like financial analysis or medical guidance, showcasing the growing interest in AI applications.

- Japan’s Fifth Generation Project: This government-backed initiative signaled international investment and aimed to build computers with advanced AI capabilities.

Yet expert systems had limitations: they lacked self-learning capabilities and couldn’t adapt beyond their pre-programmed rules. Once complexities or out-of-scope scenarios appeared, the systems often failed.
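To make the “if-then” idea concrete, here is a minimal sketch of a rule-based system in Python. The rules, fact names, and the `infer` function are hypothetical and invented purely for illustration; they are not taken from any real expert system. The sketch uses simple forward chaining: it keeps firing rules whose conditions are all known until nothing new can be concluded.

```python
# Hypothetical rules for illustration only (not from any real expert system).
# Each rule pairs a set of required facts with the conclusion it produces.
RULES = [
    ({"fever", "cough"}, "flu_suspected"),
    ({"flu_suspected", "short_of_breath"}, "refer_to_doctor"),
]


def infer(facts):
    """Forward chaining: fire rules until no new conclusion appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    # Return only what the rules derived, not the input facts.
    return known - set(facts)


in_scope = infer({"fever", "cough", "short_of_breath"})
out_of_scope = infer({"rash"})
print(sorted(in_scope))
print(sorted(out_of_scope))
```

The second call shows the weakness described above: given a fact that no rule mentions, the system concludes nothing at all, and it cannot learn a new rule on its own.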

Shift Toward Machine Learning

Data-Centric Approaches

In the late 1990s and early 2000s, AI research pivoted from rigid, rule-based methods to data-centric machine learning:

- Increasing Computational Power: As digital data surged and hardware advanced, AI systems could finally handle larger datasets.

- Neural Networks: Modeled loosely on the human brain, these networks gained traction—though early versions were shallow by today’s standards.

Note: It’s as if AI researchers traded in dusty instruction manuals for endless buffet tables of data—feeding algorithms until they learned new tricks.

The Deep Learning Revolution

Deep learning emerged in the late 2000s and early 2010s and proved game-changing for AI’s capabilities:

- Multi-Layer Neural Networks: These deeper architectures could find complex patterns in images, text, and speech.

- Corporate Investment: Tech giants like Google, Facebook, and Microsoft poured resources into AI labs.

- Successful Applications: Breakthroughs in image recognition, natural language processing, speech recognition, and autonomous vehicles underscored deep learning’s power.

AI’s Ubiquitous Presence

In today’s world, AI appears virtually everywhere:

- Virtual Assistants & Chatbots: Tools like Siri, Alexa, and advanced language models handle questions and tasks.

- Facial Recognition & Surveillance: Systems can identify individuals in photos and videos—sparking both awe and debate.

- Healthcare & Diagnostics: AI aids in interpreting medical imaging, drug discovery, and patient monitoring.

- Business & Finance: Algorithmic trading, customer service automation, and fraud detection rely heavily on machine intelligence.

Ethical and Societal Concerns

Rapid progress in AI has also triggered questions and controversies:

- Algorithmic Bias: Biased or unrepresentative training data can produce unfair or discriminatory outcomes.

- Data Privacy: As AI systems consume massive datasets, data protection becomes a critical issue.

- Job Displacement: Automation risks disrupting various industries and workforces.

- Weaponization and Surveillance: Concerns arise over autonomous weapon systems and extensive monitoring infrastructure.

International bodies, governments, and organizations now collaborate on AI regulations and ethical standards, aiming for responsible innovation.

The Road Ahead

Looking forward, breakthroughs in general AI, quantum computing, and neuromorphic engineering promise to expand AI’s reach across countless sectors—from personalized medicine to next-level transportation. While challenges remain, the field continues to grow, offering transformative solutions that could reshape human society:

- Healthcare: Advanced algorithms might predict illnesses or tailor treatments.

- Finance: Risk assessment and fraud detection could become ever more accurate.

- Sustainability: AI-driven climate models may offer better resource management or disaster responses.

Note: If there’s one takeaway, it’s that AI is likely to keep “wowing” the world, hopefully minus any rebellious robots plotting a global takeover.

Conclusion

The evolution of AI—from symbolic logic and early expert systems to deep learning’s data-driven approach—has been marked by periods of exuberance and setbacks. Today’s AI landscape brims with possibilities and risks alike. As businesses, governments, and researchers forge ahead, ethics and responsible use remain front and center. Ultimately, AI’s ever-advancing capabilities hold the potential to transform society in ways that were once the realm of science fiction, underscoring the field’s profound influence now and in the future.

Q: What is the history of artificial intelligence?

A: The history of artificial intelligence (AI) dates back to ancient times when myths and stories of intelligent automatons appeared. However, the field began formally in the mid-20th century, particularly after the 1956 Dartmouth Conference, which is often considered the birth of artificial intelligence. Key milestones include the development of early AI programs, the Turing Test proposed by Alan Turing, and significant advancements in machine learning.

Q: Who was Alan Turing and what was his contribution to AI?

A: Alan Turing was a mathematician and logician who is widely regarded as one of the founding figures in computer science and artificial intelligence. His most notable contribution to AI is the Turing Test, which assesses a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. Turing’s work laid the groundwork for future developments in AI technology.

Q: What was the first AI program ever created?

A: The first AI program is often credited to Allen Newell, Herbert A. Simon, and Cliff Shaw, who developed the Logic Theorist in 1956. The program proved theorems in symbolic logic in a way meant to mimic human problem-solving, and it is considered one of the earliest examples of artificial intelligence.

Q: What is the Turing Test and why is it important in AI?

A: The Turing Test is a measure proposed by Alan Turing to evaluate a machine’s ability to exhibit intelligent behavior. If a human evaluator cannot reliably distinguish between a machine and a human based solely on their responses, the machine is said to have passed the Turing Test. It remains a significant benchmark in the field of artificial intelligence.

Q: Can you explain the timeline of artificial intelligence?

A: The timeline of artificial intelligence includes several key milestones: the Dartmouth Conference in 1956, which marked the birth of AI as a field; the development of ELIZA, an early chatbot, in the 1960s; advances in neural networks in the 1980s; and the resurgence of AI through deep learning in the 2010s. Each phase has contributed to the gradual evolution of AI technologies.

Q: What were the AI winters and why did they occur?

A: AI winters are periods in the history of artificial intelligence when interest in and funding for AI research declined significantly. The first AI winter occurred in the 1970s due to unmet expectations and limited computational power. The second AI winter happened in the late 1980s and early 1990s, driven by the limitations of early AI systems and a lack of practical applications. These cycles of optimism and disappointment have shaped the development of AI.

Q: How has the development of AI influenced society?

A: The advancement of artificial intelligence has profoundly influenced society in various ways, including automation of jobs, improvements in healthcare through predictive analytics, and the development of AI programs that enhance user experience, such as virtual assistants and chatbots. The future of AI promises even more integration into daily life, but it also raises ethical considerations regarding privacy and job displacement.

Q: What does the future of AI look like?

A: The future of AI is expected to be characterized by more sophisticated algorithms, improved machine learning techniques, and broader applications across various industries. There is potential for powerful AI systems that could assist in solving complex global challenges. However, ethical implications and the need for regulations will be crucial topics in the ongoing discussion about the future of artificial intelligence.

Q: What role does early AI play in the development of modern AI?

A: Early AI, including foundational concepts like artificial neurons and simple algorithms, paved the way for the development of modern AI technologies. Innovations from early AI research laid the groundwork for contemporary approaches like deep learning and neural networks, which are integral to today’s advancements in artificial intelligence.